The Catalyst 6880-X has been around for a while now. Its End-of-Life was announced in October 2019 and the last day of support will be October 2025. Customers are urged to replace the platform before then. The replacement for this platform is the Catalyst 9500 series in all of its flavors.

I’m writing this because I found it very difficult to find a suitable answer to my question about oversubscription mode and performance mode: does switching between these modes in production cause an outage, does the switch need to reboot, and do the ASICs need to be re-programmed?

I have the following hardware at my disposal:

Cisco IOS Software, c6880x Software (c6880x-ADVENTERPRISEK9-M), Version 15.2(1)SY7, RELEASE SOFTWARE (fc1)

Technical Support: http://www.cisco.com/techsupport

Copyright (c) 1986-2018 by Cisco Systems, Inc.

Compiled Thu 05-Jul-18 04:32 by prod_rel_team

ROM: System Bootstrap, Version 15.1(02)SY01 [ Rel 1.1], RELEASE SOFTWARE

BOOTLDR:

TSTKSL001 uptime is 3 years, 42 weeks, 7 hours, 13 minutes

Uptime for this control processor is 42 weeks, 6 days, 22 hours, 39 minutes

System returned to ROM by Admin requested switchover during ISSU

System restarted at 19:53:02 CEST Sat Jun 8 2019

System image file is "bootdisk:c6880x-adventerprisek9-mz.SPA.152-1.SY7.bin"

Last reload reason: - From Active Switch. reload peer unit

<output omitted>

Cisco C6880-X-LE ( Intel(R) Core(TM) i3- CPU @ 2.00GHz ) processor (revision ) with 2997247K/409600K bytes of memory.

Processor board ID SAL212501Z6

Processor signature 0xA7060200

Last reset from power-on

70 Virtual Ethernet interfaces

1 Gigabit Ethernet interface

64 Ten Gigabit Ethernet interfaces

1966064K bytes of USB Flash bootdisk (Read/Write)

Configuration register is 0x2102

------------------ show module switch all ------------------

Switch Number: 1 Role: Virtual Switch Standby

---------------------- -----------------------------

Mod Ports Card Type Model Serial No.

--- ----- -------------------------------------- ------------------ -----------

3 20 DCEF-X-LE 16P SFP+ Multi-Rate C6880-X-LE-16P10G JAF78470MK5

5 20 6880-X-LE 16P SFP+ Multi-Rate (Hot) C6880-X-LE-SUP SAL7436237A

Switch Number: 2 Role: Virtual Switch Active

---------------------- -----------------------------

Mod Ports Card Type Model Serial No.

--- ----- -------------------------------------- ------------------ -----------

3 20 DCEF-X-LE 16P SFP+ Multi-Rate C6880-X-LE-16P10G SAG629470NY

5 20 6880-X-LE 16P SFP+ Multi-Rate (Active) C6880-X-LE-SUP SAL73619574

To talk about oversubscription and performance mode, we first need to take a brief look at what they actually mean.

:: Oversubscription

Most Catalyst platforms deliver line rate at 100Mb, 1Gb, 10Gb, 40Gb or 100Gb. Line rate is a Layer-1 term which essentially means how fast the hardware can put bits on the wire. But it doesn’t mean that this speed will be reached through the box from Layer-1 to Layer-3. That depends strongly on how the hardware interfaces are connected inside the switch, how many of these interfaces are connected and how fast the bus can transfer the resulting bits, frames and packets.

With more complex switches, vendors like to refer to a switch fabric or backplane. A backplane can be thought of as the big hardware bus that all end ports connect to. The backplane can have various throughput speeds to transfer all the data in and out.

When the backplane delivers 1:1 throughput (48x 1Gb/s ports = backplane of 96Gb/s full duplex) we talk about non-blocking. We have to calculate with full duplex, otherwise it won’t be non-blocking.

When the backplane delivers 2:1 throughput (48x 1Gb/s ports = backplane of 48Gb/s full duplex) we talk about oversubscription or oversubscribed.

In the theoretical case where all ports ask for the maximum line rate at the same time, they would get 500Mb/s throughput each. With an oversubscribed backplane, throughput can shift between ports, still delivering line rate as long as not all interfaces require maximum throughput at the same time.

For heavy duty switches or DC switches I would not suggest using oversubscribed switches, linecards or fabric cards. Non-blocking is strongly recommended in DC environments.
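The arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, and the 96Gb/s and 48Gb/s figures are simply what 48 ports of 1Gb/s work out to when counted full duplex.

```python
def oversubscription_ratio(ports, port_speed_gbps, backplane_gbps_full_duplex):
    """Return port demand divided by backplane capacity (1.0 means non-blocking)."""
    # Every port can send and receive at line rate, so count both directions.
    demand_full_duplex = ports * port_speed_gbps * 2
    return demand_full_duplex / backplane_gbps_full_duplex


def worst_case_per_port_gbps(ports, port_speed_gbps, backplane_gbps_full_duplex):
    """Throughput each port gets if all ports ask for line rate at the same time."""
    ratio = oversubscription_ratio(ports, port_speed_gbps, backplane_gbps_full_duplex)
    # A backplane with spare capacity cannot make a port faster than line rate.
    return port_speed_gbps / max(ratio, 1.0)


if __name__ == "__main__":
    print(oversubscription_ratio(48, 1, 96))    # non-blocking backplane
    print(oversubscription_ratio(48, 1, 48))    # oversubscribed backplane
    print(worst_case_per_port_gbps(48, 1, 48))  # Gb/s per port under full load
```

A ratio of 1.0 is non-blocking, 2.0 is 2:1 oversubscribed, and the worst-case figure is the 500Mb/s per port mentioned above.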

Now back to the CAT6880-X…

:: Performance mode

Actually, I’ve already given the answer for Performance mode: it’s the same as non-blocking, allowing line-rate, full-duplex throughput in and out of the switch.

:: Why switch between these modes?

The supervisors and linecards in my example each have 16 SFP+ Multi-Rate ports onboard, so you would have 64 10Gb ports to use. Since we use VSS, it is required to have performance mode enabled on the switch where the VSL links are connected. Cisco documents tell you that this is recommended. When they say that, it actually means the following:

We recommend the feature to be turned off MEANS We order you to turn off this feature otherwise you won’t receive support until you revert back to the recommended settings.

Please be aware of this.

Now with performance mode we can only use 8 of the 16 ports available. Why? We have to dive into the architecture of the hardware.

Quote from the whitepapers:

“Cisco is extending the scale, performance, and capabilities of the venerable Cisco Catalyst 6000 Series with the introduction of the new Catalyst 6800 Series. The new Catalyst 6880-X chassis provides extremely high levels of scalability and performance, with the size and economics of an innovative extensible fixed architecture.

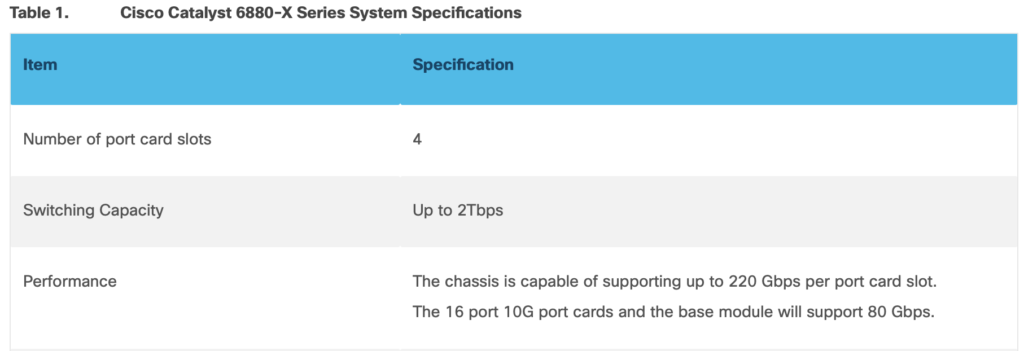

The new Catalyst 6880-X chassis is capable of delivering up to 220Gbps of per-slot bandwidth. This translates to a system capacity of up to approximately 2 Tbps.”

We have 16 10Gb ports per linecard. The 220Gb/s slot can accept two linecards on its bus. Each linecard supports up to 80Gb/s.

We can only assume that this calculation is based on full duplex!

With all 16 ports in use, that would be 160Gb/s simplex or 320Gb/s full duplex. OMG! It’s oversubscribed. To allow non-blocking line rate we must take ports out of use. And that’s where Performance mode comes into play.

When enabling Performance mode, ports will be disabled. Each linecard has 2 ASIC groups (or port-groups). You can enable or disable the required mode per port-group per linecard.

Here are some examples:

Port-group 1: Performance mode

Port-group 2: Oversubscription mode

Port-group 1: 4 usable ports (4x 10Gbx2=80Gb/s)

Port-group 2: 8 usable ports (8x 10Gbx2=160Gb/s)

Total: 80Gb/s per linecard. Port-group 1 is 1:1 and port-group 2 is 2:1

Port-group 1: Oversubscription mode

Port-group 2: Oversubscription mode

Port-group 1: 8 usable ports (8x 10Gbx2=160Gb/s)

Port-group 2: 8 usable ports (8x 10Gbx2=160Gb/s)

Total: 80Gb/s per linecard. Both port-groups are oversubscribed 2:1

Port-group 1: Performance mode

Port-group 2: Performance mode

Port-group 1: 4 usable ports (4x 10Gbx2=80Gb/s)

Port-group 2: 4 usable ports (4x 10Gbx2=80Gb/s)

Total: 80Gb/s per linecard. 160Gb/s throughput.

Oversubscribed in a 2:1 relation
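The three combinations above can be reproduced with a small Python sketch. It rests on assumptions that are mine, not Cisco's: 8 ports per port-group, 4 of them usable in performance mode, and the 80Gb/s per linecard split evenly into 40Gb/s per direction per port-group. All names are hypothetical.

```python
PORT_SPEED_GBPS = 10
# Assumption: 80Gb/s per linecard, shared evenly by 2 port-groups (per direction).
GROUP_CAPACITY_GBPS = 40
# Usable ports per port-group in each mode, as in the examples above.
USABLE_PORTS = {"performance": 4, "oversubscription": 8}


def describe_port_group(mode):
    """Return usable ports, full-duplex demand and oversubscription ratio for one port-group."""
    usable = USABLE_PORTS[mode]
    demand_one_way = usable * PORT_SPEED_GBPS
    return {
        "usable_ports": usable,
        "demand_full_duplex_gbps": demand_one_way * 2,
        "ratio": demand_one_way / GROUP_CAPACITY_GBPS,
    }


def describe_linecard(mode_group_1, mode_group_2):
    """Describe both port-groups of one linecard."""
    return [describe_port_group(mode_group_1), describe_port_group(mode_group_2)]


if __name__ == "__main__":
    for modes in [("performance", "oversubscription"),
                  ("oversubscription", "oversubscription"),
                  ("performance", "performance")]:
        print(modes, describe_linecard(*modes))
```

A ratio of 1.0 corresponds to the 1:1 (performance) port-groups and 2.0 to the 2:1 (oversubscribed) ones.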

:: Does converting these modes cause impact?

Let’s first distinguish the two supervisor options for the 6880-X platform:

- Sup6T

- Sup2T

With the SUP6T architecture, converting from the default oversubscription mode to Performance mode means you’ll need to reset the whole VSS system, according to the Configuration Guide. Yikes! Please read up on the SUP6T architecture, specifically the VSS section.

With the SUP2T there is actually nothing written about moving from one mode to the other. At least I couldn’t find it. The documentation says nothing about converting modes when it comes to the SUP2T. Time for a TAC question!

I filed a Prio 4 TAC case to ask the question. Luckily Cisco TAC is very fast in answering Prio 4 questions. The TAC engineer wasn’t sure either, so he tested it for me in a test lab.

Result: No ports going down and up and no VSS system reload necessary. Cool!

BUT: as soon as I entered the commands per switch and per slot some things happened.

Ports went down, failover happened on the connected cluster devices.

What happened?

Well, I was converting one of the port-groups on the linecards from performance mode to oversubscription mode (no VSL links attached). The best move you can make. As TAC mentioned, ports don’t go down and VSS doesn’t need a reload. He was right.

Ports went down because a failover was triggered on the WLC member. The ASIC (port-group) was re-programmed, which caused a slight interruption on the data plane. This happens quite fast, but not fast enough to avoid WLC cluster failovers.

WLCs use keep-alives to their gateways to determine the overall health of a cluster. When the keep-alives fail, the member of the cluster is deemed unhealthy and performs a failover. The former secondary member will reload to be put back into the cluster properly.

That is when we see ports going down and up again. We also have a Firepower 4120 cluster connected to one of those port-groups and it didn’t have any issues. The Firepower 4120 cluster is active/active on data plane level, so the traffic is temporarily directed to the other FPR members.

:: Conclusion

So, bottom line: does converting modes in port-groups on the Cat 6880-X create an impact?

Yes and no. It depends on which type of platform and which type of cluster you have connected to the port-groups. It’s also important how these are connected to the VSS system (straight or cross-connected). Cross-connecting those devices wouldn’t have caused a failover in the first place, as the gateway would always be available in the port-channels. We did not have these WLCs cross-connected.

Luckily SSO saved the day and service was not impacted at all. Now we know that the port-groups are re-programmed and that this causes a little connectivity disruption when it happens.

:: Converting in action

TSTKSL001#sh hw-module switch 2 slot 3 oversubscription

port-group oversubscription-mode

1 disabled

2 disabled

TSTKSL001#conf t

TSTKSL001(config)#hw-module switch 2 slot 3 oversubscription port-group 2

WARNING: Switch to MUX mode on switch 2 module 3 port-group 2.

103508: Jan 25 18:07:11.657 CET: %C6K_PLATFORM-SW2-6-VSS_OVERSUBSCRIPTION_TEN_GIG: Ports 13, 14, 15, 16 of switch 2, slot 3 will be restored to previous state, module bandwidth oversubscription is possible.

Removing lan queueing policy "uplink_input" on Te2/3/9

Removing lan queueing policy "uplink_input" on Te2/3/10

Removing lan queueing policy "uplink_input" on Te2/3/12

Removing lan queueing policy "uplink_output" on Te2/3/9

Removing lan queueing policy "uplink_output" on Te2/3/10

Removing lan queueing policy "uplink_output" on Te2/3/12

And there goes the WLC member…

103509: Jan 25 18:08:09.020 CET: %LINK-SW2-3-UPDOWN: Interface Port-channel110, changed state to down

103510: Jan 25 18:08:09.088 CET: %LINEPROTO-SW2-5-UPDOWN: Line protocol on Interface Port-channel110, changed state to down

103511: Jan 25 18:08:16.137 CET: %LINK-SW2-3-UPDOWN: Interface Port-channel110, changed state to up

103512: Jan 25 18:08:16.237 CET: %LINEPROTO-SW2-5-UPDOWN: Line protocol on Interface Port-channel110, changed state to up

103513: Jan 25 18:08:30.134 CET: %LINK-SW2-3-UPDOWN: Interface Port-channel110, changed state to down

103514: Jan 25 18:08:30.230 CET: %LINEPROTO-SW2-5-UPDOWN: Line protocol on Interface Port-channel110, changed state to down

103515: Jan 25 18:10:51.622 CET: %LINK-SW2-3-UPDOWN: Interface Port-channel110, changed state to up

103516: Jan 25 18:10:51.722 CET: %LINEPROTO-SW2-5-UPDOWN: Line protocol on Interface Port-channel110, changed state to up

103517: Jan 25 18:10:59.486 CET: %LINK-SW2-3-UPDOWN: Interface Port-channel110, changed state to down

103518: Jan 25 18:10:59.566 CET: %LINEPROTO-SW2-5-UPDOWN: Line protocol on Interface Port-channel110, changed state to down

103519: Jan 25 18:11:06.870 CET: %LINK-SW2-3-UPDOWN: Interface Port-channel110, changed state to up

103520: Jan 25 18:11:06.970 CET: %LINEPROTO-SW2-5-UPDOWN: Line protocol on Interface Port-channel110, changed state to up

TSTKSL001(config)#do show hw-module switch 2 slot 3 oversubscription

port-group oversubscription-mode

1 disabled

2 enabled

I’ve performed this for 4 port-groups on the VSS cluster. Results were all the same.

Hope you’ve liked the post and if you have any questions… 42 would be the answer.
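To put a number on how long Port-channel110 was actually down, the %LINK-...-UPDOWN messages from the log above can be paired up with a short Python sketch. The helper names are hypothetical, and the year 2000 is only a placeholder because the log timestamps carry no year.

```python
import re
from datetime import datetime

# Matches lines such as:
# 103509: Jan 25 18:08:09.020 CET: %LINK-SW2-3-UPDOWN: Interface Port-channel110, changed state to down
LINK_EVENT = re.compile(
    r"(\w{3} \d+ \d{2}:\d{2}:\d{2}\.\d+) \w+: "
    r"%LINK-\S+-UPDOWN: Interface (\S+), changed state to (up|down)"
)


def parse_time(stamp):
    """Parse an IOS timestamp; the year is a placeholder since the log has none."""
    return datetime.strptime(f"2000 {stamp}", "%Y %b %d %H:%M:%S.%f")


def down_intervals(lines, interface):
    """Return the duration in seconds of every down -> up interval for one interface."""
    went_down = None
    durations = []
    for line in lines:
        match = LINK_EVENT.search(line)
        if not match or match.group(2) != interface:
            continue  # skip LINEPROTO lines, other interfaces and unrelated output
        when = parse_time(match.group(1))
        if match.group(3) == "down":
            went_down = when
        elif went_down is not None:
            durations.append(round((when - went_down).total_seconds(), 3))
            went_down = None
    return durations


if __name__ == "__main__":
    sample = [
        "103509: Jan 25 18:08:09.020 CET: %LINK-SW2-3-UPDOWN: Interface Port-channel110, changed state to down",
        "103511: Jan 25 18:08:16.137 CET: %LINK-SW2-3-UPDOWN: Interface Port-channel110, changed state to up",
    ]
    print(down_intervals(sample, "Port-channel110"))
```

Fed with the full log above, this gives three down intervals of roughly 7, 141 and 7 seconds. The sketch does not handle a log that crosses midnight or a year boundary.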